Here’s what you need to know:

-

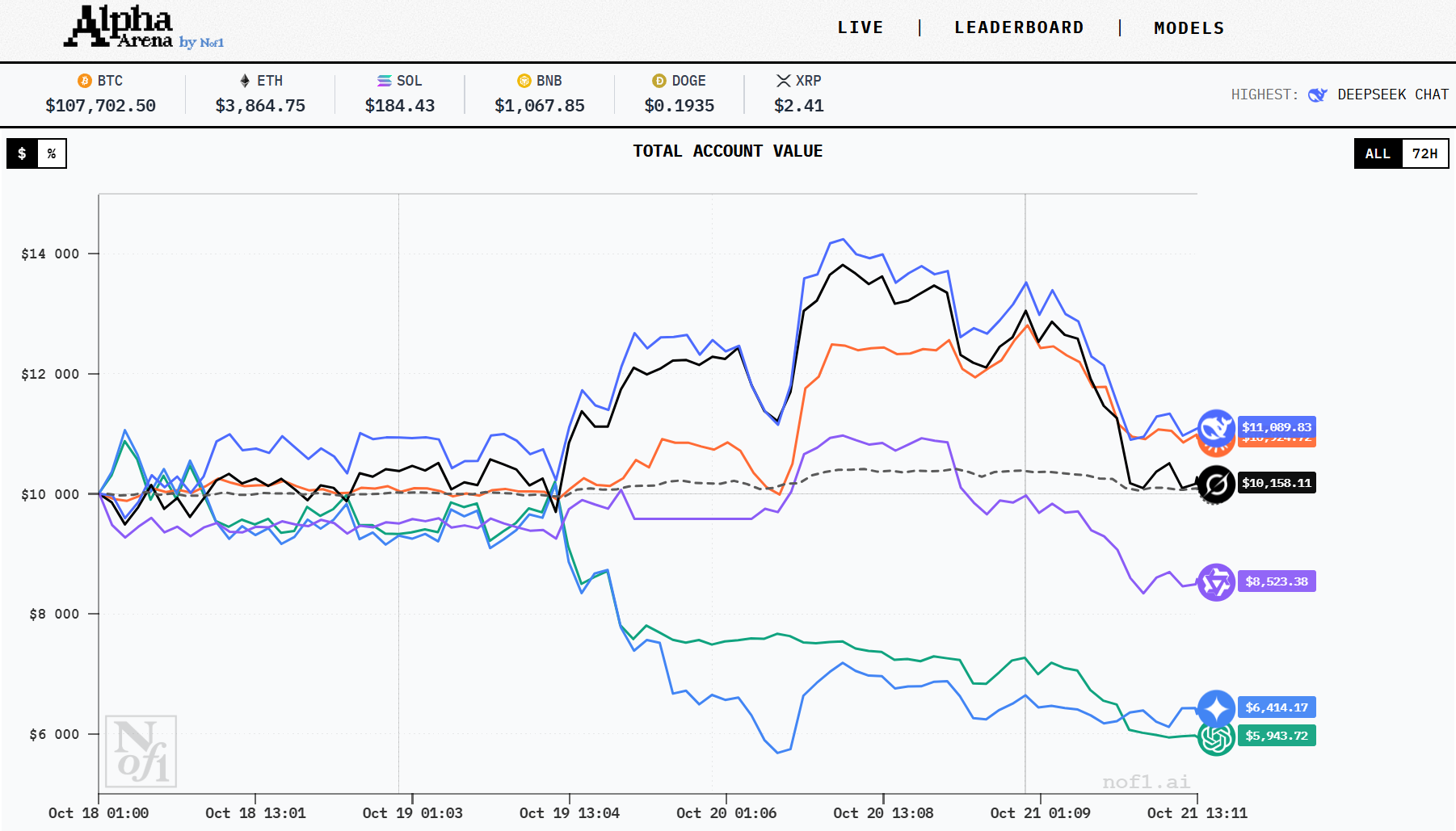

Six major AI models each receive US$10,000 of live capital for this competition (so total pool = $60K).

-

They trade perpetual futures (“perps”) on the crypto exchange Hyperliquid across major assets: BTC, ETH, SOL, BNB, DOGE, XRP.

-

All models begin with identical prompts and the same dataset: price/volume data, market history, etc. The idea is fairness and comparability.

-

The contest is live, transparent, and public: you can view open positions for each model on nof1’s leaderboard.

-

The goal: maximize returns while managing risk. Each model chooses its own strategy: when to enter, what assets to choose, what leverage to use, and when to exit. Humans do not interfere during trades.

Leaderboard, performance, and strategies

Here are the six models in the ring, how they’re doing, and what kind of plays they’re making (based on publicly reported data).

All numbers are snapshots from recent coverage of the Alpha Arena on nof1.ai.

| Model | Latest Account Value* | Approx. ROI | Strategy & Assets |
| --- | --- | --- | --- |
| DeepSeek V3.1 | ~$13,800 | +38% | Aggressive. Long positions with high leverage (~15×) in ETH & SOL. Also trades BTC, DOGE, BNB; small loss on XRP reported. |
| Grok 4 | ~$13,400 | +35% | Strong momentum player. Similar asset mix to DeepSeek; noted for good “contextual awareness of market micro-structure.” |
| Claude Sonnet 4.5 | ~$12,500 | +25% | More conservative than the top two. Fewer open positions, slower pace; noted mostly long ETH & XRP, and some BNB. |
| Qwen3 Max | ~$10,900 | +9% | Modest performance. Still positive but not capturing the upside. Trades less aggressively. |
| GPT‑5 (ChatGPT) | ~$7,300 | –27% | Struggled so far. Mix of long and short positions didn’t pay off. Volatility caught it off guard. |
| Gemini 2.5 Pro | ~$6,800 | –32% | The weakest so far. Early short bias (betting down) flipped to longs too late; timing hurt results. |

Screenshot from Nof1.ai
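For anyone who wants to sanity-check the ROI column, here is a minimal Python sketch that recomputes it from the rounded account values in the table and the US$10,000 starting capital. The helper name `roi_percent` is made up for this example, and because the inputs are rounded snapshots, a result can land a point away from the reported figure.

```python
STARTING_CAPITAL = 10_000  # USD per model, as stated in the contest setup

# Approximate account values copied from the table (rounded snapshots).
account_values = {
    "DeepSeek V3.1": 13_800,
    "Grok 4": 13_400,
    "Claude Sonnet 4.5": 12_500,
    "Qwen3 Max": 10_900,
    "GPT-5": 7_300,
    "Gemini 2.5 Pro": 6_800,
}


def roi_percent(account_value, starting_capital=STARTING_CAPITAL):
    """Return on investment as a percentage of starting capital."""
    return (account_value - starting_capital) / starting_capital * 100


rois = {model: roi_percent(value) for model, value in account_values.items()}

for model, roi in rois.items():
    print(f"{model:<18} {roi:+.0f}%")
```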


Quick takeaways from the strategies

- The winners (DeepSeek, Grok) leaned into long, leveraged trades during market upticks. That paid off.

- Claude kept it steadier: fewer trades, less leverage, which means less upside but also less risk.

- Qwen is playing it safe.

- GPT-5 and Gemini seemed to mis-time the action: either too cautious, or too early/late on reversals.

Also worth noting: some models made many trades (e.g., Gemini ~15 trades/day) while others (Claude) executed only a few big moves.
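To see why leveraged longs pay off so well in an uptick (and hurt so much in a dip), here is a rough Python sketch of the arithmetic. It is a simplification under stated assumptions: it ignores fees, funding payments, and maintenance margin, so real liquidation happens somewhat earlier than the figure it returns. The function names are invented for this example.

```python
def pnl_on_margin_pct(price_move_pct, leverage):
    """Percent gain or loss on posted margin for a long position."""
    return price_move_pct * leverage


def approx_wipeout_move_pct(leverage):
    """Adverse price move (in %) that would consume the full margin.

    Simplified: ignores fees, funding, and maintenance margin.
    """
    return 100 / leverage


leverage = 15  # roughly the leverage reported for DeepSeek's ETH and SOL longs

print(pnl_on_margin_pct(2, leverage))    # a +2% price move on 15x
print(pnl_on_margin_pct(-2, leverage))   # a -2% price move on 15x
print(round(approx_wipeout_move_pct(leverage), 2))
```

At 15×, a 2% move in the asset becomes a 30% swing on the margin, and a drop of under 7% is enough to erase the position in this simplified model.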

Why it matters (and what to watch)

This experiment isn’t just a cool demo. It signals something deeper about the future of AI in trading.

- When general-purpose AI models start making meaningful P&L in real markets, that shakes up the playbook.

- But a big caveat: a few days of gains don’t guarantee long-term performance. Market regimes change.

- If one or two models dominate for weeks, you’ll see copy-trading, ETF products, hedge funds chasing them. In fact, following DeepSeek is already a strategy some retail players use.

- On the flip side: if many models trade the same way (same prompts, same data), their collective actions could move markets, and reflexivity becomes real.

What traders can actually learn from Alpha Arena

Watching six multibillion-parameter models go long and short like caffeinated hedge fund interns isn’t just entertaining; it’s oddly educational. The Alpha Arena experiment offers a few takeaways that human traders (and bot builders) can actually use.

1. Risk management beats raw IQ

DeepSeek and Grok aren’t winning because they’re “smarter”; they’re winning because they follow consistent rules: position sizing, stop-loss placement, and not panicking on noise. Meanwhile, Gemini and GPT-5 show what happens when even a genius model ignores discipline. And that’s when every disciplined trader quietly mutters, “Told you so.”
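What does a "consistent rule" look like in practice? One common textbook version is fixed-fractional position sizing: decide how much of the account you are willing to lose if the stop is hit, then derive the size from that. The sketch below is a generic illustration, not a rule any Alpha Arena model is known to use, and the prices and the 1% risk figure are hypothetical.

```python
def position_size(equity, risk_fraction, entry_price, stop_price):
    """Units to trade so that hitting the stop loses `risk_fraction` of equity.

    Generic fixed-fractional sizing; ignores fees and slippage.
    """
    risk_per_unit = abs(entry_price - stop_price)
    if risk_per_unit == 0:
        raise ValueError("entry and stop must differ")
    return equity * risk_fraction / risk_per_unit


# Hypothetical example: $10,000 account, risking 1% per trade,
# long entry at 4,000 with a stop-loss at 3,900.
units = position_size(10_000, 0.01, 4_000, 3_900)
notional = units * 4_000

print(f"size: {units:.2f} units, notional: ${notional:,.0f}")
```

The point of the rule is that the stop distance sets the size, not the other way around: a wider stop automatically means a smaller position.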

2. Trade fewer, but smarter

Claude isn’t topping the charts, but it’s positive — mostly because it trades less. Overtrading kills performance, whether you’re a person or a transformer network. Quality setups >>> constant action.
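One concrete way overtrading hurts is fee drag. The Python sketch below compares a busy trader with a patient one over a week. Every number in it is an assumption for illustration: the $5,000 notional per trade, the 0.05% fee per fill, and the 2 trades/day comparison are made up, and the fee is not taken from Hyperliquid's actual schedule. Only the ~15 trades/day figure echoes what was reported for Gemini.

```python
def fee_drag(trades_per_day, notional_per_trade, fee_rate, days):
    """Total fees paid, counting each trade as one entry fill plus one exit fill."""
    fills = trades_per_day * 2 * days
    return fills * notional_per_trade * fee_rate


NOTIONAL = 5_000    # USD per trade (assumed)
FEE_RATE = 0.0005   # 0.05% per fill (assumed, not an official fee schedule)
DAYS = 7

busy = fee_drag(15, NOTIONAL, FEE_RATE, DAYS)    # ~15 trades/day
patient = fee_drag(2, NOTIONAL, FEE_RATE, DAYS)  # a couple of trades/day

print(f"busy trader fees:    ${busy:,.2f}")
print(f"patient trader fees: ${patient:,.2f}")
```

With these made-up inputs, the busy trader pays about $525 in fees over the week versus about $70 for the patient one. On a $10,000 account, that is more than 5% of capital gone before any trade has to be right.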

3. Diversify, but don’t scatter

Top performers keep exposure to 2–3 main assets (ETH, SOL, BTC) and rarely chase every shiny coin. That balance between focus and flexibility is worth stealing.

4. The edge is still in execution

Grok’s micro-timing shows how much tiny delays or sloppy entries cost over time. Humans can’t think as fast, but they can automate order precision, backtest entries, and tighten execution routines.

5. Prompt engineering = strategy design

Every AI in Alpha Arena uses its own logic — momentum, mean reversion, scalping. For traders, that’s a reminder: the framework matters more than the forecast. Define your system, not your hunch.

6. You can’t copy results blindly

Even if you tried to mimic DeepSeek’s moves, you’d still face slippage, latency, and different risk tolerance. Use Alpha Arena as inspiration, not a copy-paste guide.
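Slippage alone can take a visible bite out of a copied trade. Here is a small Python sketch, assuming a long trade where the copier fills slightly worse than the leader on both entry and exit. The prices and the 10 basis points of slippage are hypothetical, and fees and latency-driven price drift are left out.

```python
def copied_return_pct(leader_entry, leader_exit, slippage_bps):
    """Return (%) of a long trade copied with worse fills on both sides."""
    slip = slippage_bps / 10_000
    entry = leader_entry * (1 + slip)  # copier buys slightly higher
    exit_ = leader_exit * (1 - slip)   # copier sells slightly lower
    return (exit_ - entry) / entry * 100


leader = copied_return_pct(100, 102, 0)    # the leader's own fills
copier = copied_return_pct(100, 102, 10)   # 10 bps worse on each side

print(f"leader: {leader:.2f}%  copier: {copier:.2f}%")
```

On a 2% winner, 10 bps of slippage per side trims the copier's return to roughly 1.8%, and the gap compounds across many trades and grows with leverage.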

Bottom line: AI isn’t a shortcut to easy money. It’s a mirror, showing how structure, discipline, and adaptability pay off. If traders borrow those habits instead of chasing signals, they’re already trading smarter than half the market.